In a recent architecture design meeting, our team tackled a tricky hybrid connectivity issue. We had a client running a Microsoft on-premises data gateway on AWS (yes, “on-premises” in the cloud!) to connect Microsoft Dataverse with Power BI. Initially, their setup left a critical gap: data traveling between Dataverse and Power BI was crossing the public internet. In this blog post, we’ll walk through the context of the problem, the solution we designed using Azure Relay with a Private Endpoint, the challenges we faced (including a surprising limitation around IP restrictions), and the lessons learned.

Context and Initial Problem

Our client’s architecture started with a Power BI service in the cloud and data sources in Dataverse, connected via an on-premises data gateway installed on an AWS EC2 instance. The gateway acted as the bridge between AWS and Power BI. However, because it was not originally configured with any private link or specialized networking, the gateway’s traffic to Azure was going out over the public internet.

In simpler terms, the queries and data traveling from Dataverse (through the gateway in AWS) to Power BI were publicly exposed – encrypted, yes, but still traversing public networks.

This default behavior is by design: the on-premises gateway establishes an outbound connection to Azure’s cloud relay (Azure Service Bus/Relay) over the internet. That outbound-only connection is great for security in one sense (no inbound ports need to be opened on AWS), and it is the commonly recommended setup.

But our client’s security team was uneasy knowing that even outbound, their data was leaving AWS and Azure networks to travel across the public internet. They had attempted to use Private Endpoints in the initial deployment, hoping to keep all traffic internal, but ran into roadblocks – the gateway didn’t support Private Link out-of-the-box in that configuration, so they ended up with the default behavior (public internet traffic). The result: any data refresh or query from Power BI to Dataverse via the gateway was going through a public channel. For a sensitive enterprise environment, that was a big red flag.

Objective: Locking Down the Connection

The goal was clear: secure the communication path between the AWS-hosted gateway and the Power BI service with minimal exposure to public networks. In practice, this meant finding a way to keep the Dataverse-to-Power BI traffic on private infrastructure as much as possible. We envisioned a solution where the gateway could connect to Azure without traversing the open internet, ideally using something like a VNet integration or private tunnel between AWS and Azure.

Specifically, we set out to introduce an Azure Relay under our control (the same Azure Service Bus Relay technology the Power BI gateway uses under the hood), configured with an Azure Private Endpoint. By doing so, the gateway’s traffic would be routed through a private IP interface in Azure, effectively keeping it on the Microsoft backbone network and off the internet.

In essence, the Azure Relay service would be “brought into” a private Azure VNet, and our AWS gateway would talk to it over a private link. This setup would fulfill the objective: Power BI and Dataverse communications remain secure and private, with no data payloads observable on public networks (only metadata or control might still hit public endpoints, if any).

Another key requirement was to achieve this with minimal changes to the client’s existing setup. We didn’t want to reinvent their whole network; ideally, we’d reuse the gateway they already installed in AWS and just tweak how it connects to Azure. And of course, throughout this change, we needed to ensure that Power BI still functioned normally – meaning any solution had to be compatible with how Power BI interacts with on-premises data gateways.

Solution Approach: Azure Relay + Private Endpoint to the Rescue

After brainstorming and reviewing Microsoft’s documentation, we devised a plan to reconfigure the on-premises data gateway to use a custom Azure Relay (instead of the default one Microsoft auto-provisions). The gateway supports this approach: during installation (or later, via configuration), you can provide your own Azure Relay details.

We set up an Azure Relay namespace in our Azure subscription and created a WCF Relay (NetTCP) endpoint dedicated to the client’s gateway. This gave us control over the relay’s network settings. We generated the required shared access keys (a “Send” key and a “Listen” key for the relay) and applied them to the gateway configuration so that the gateway would register itself on our Azure Relay endpoint.
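The values involved are easy to mix up, so here is a minimal sketch of how they fit together. Everything in it is an assumption for illustration: `RelaySettings` and its field names are hypothetical and do not reflect the gateway's actual configuration schema, and the namespace, relay and rule names are placeholders. The only convention it relies on is the standard `sb://<namespace>.servicebus.windows.net/<relay>/` address form for a relay entity.

```python
from dataclasses import dataclass

# DNS suffix for Service Bus / Relay namespaces in the Azure public cloud.
SERVICEBUS_SUFFIX = "servicebus.windows.net"


@dataclass(frozen=True)
class RelaySettings:
    """Hypothetical container for the bring-your-own-relay values.

    Field names are illustrative only; they do not mirror the gateway's
    real configuration file or UI labels.
    """

    namespace: str        # Relay namespace name, e.g. "contoso-gw-relay" (hypothetical)
    relay_name: str       # WCF relay entity dedicated to one gateway
    listen_key_name: str  # SAS rule with Listen rights
    send_key_name: str    # SAS rule with Send rights

    def validate(self) -> None:
        """Basic sanity checks before the values go into the gateway."""
        if not self.namespace or not self.relay_name:
            raise ValueError("namespace and relay_name are required")
        # Separate rules keep each key scoped to the one right it needs.
        if self.listen_key_name == self.send_key_name:
            raise ValueError("use separate SAS rules for Listen and Send")

    @property
    def namespace_fqdn(self) -> str:
        return f"{self.namespace}.{SERVICEBUS_SUFFIX}"

    @property
    def relay_address(self) -> str:
        # Standard sb:// form for addressing a relay entity inside a namespace.
        return f"sb://{self.namespace_fqdn}/{self.relay_name}/"


settings = RelaySettings(
    namespace="contoso-gw-relay",
    relay_name="gateway-01",
    listen_key_name="gateway-listen",
    send_key_name="gateway-send",
)
settings.validate()
print(settings.relay_address)  # sb://contoso-gw-relay.servicebus.windows.net/gateway-01/
```

Keeping the namespace, entity and key names in one place also makes the later DNS and firewall work easier, since every check keys off the same namespace FQDN.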

Essentially, we told the gateway: “Use this Azure Relay (in our Azure subscription) to communicate with Power BI.” With the gateway now pointing to our own Relay namespace, the next step was to secure that Relay with a Private Endpoint. In Azure, you can attach a private endpoint to many services – Azure Relay included – which assigns the service a private IP address in a chosen VNet.

We created a Private Endpoint for the Relay in an Azure Virtual Network that we set up for this purpose. While configuring the Relay’s networking, we disabled its “Public network access” entirely and allowed it to be accessed only via the private endpoint. (We did enable the option to “Allow trusted Microsoft services”; more on that in a bit, since it became a crucial detail.) By disabling public access, we ensured that no one on the internet could hit this Azure Relay endpoint; the Relay could only be reached through the private IP within the Azure VNet.

Now, having a private endpoint means that the client’s AWS environment needed a way to reach that Azure private IP. We coordinated with their network team to set up a private network connection between AWS and Azure. In this case, the client established a site-to-site VPN from their AWS VPC to the Azure VNet (alternatively, they could have used ExpressRoute private peering if a dedicated circuit was available).

This VPN allowed the EC2 instance running the gateway to resolve and connect to the Azure Relay’s private endpoint as if it were just another IP on their extended network. With DNS configured (we set up the necessary private DNS zone in Azure so the Relay’s namespace URL would resolve to the private IP), the gateway in AWS could now successfully connect to the Azure Relay entirely over a private network path.
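A quick pre-flight script run on the gateway host would have caught our DNS and routing issues sooner. The sketch below assumes the usual Private Link pattern for Relay, where the public name `<namespace>.servicebus.windows.net` resolves through the `privatelink.servicebus.windows.net` zone to the private endpoint IP. It checks that the name resolves only to private addresses, that those addresses sit inside the Azure VNet prefixes routed over the VPN, and that the AWS VPC and Azure VNet ranges do not overlap (a site-to-site VPN needs distinct address spaces). The namespace and CIDRs are hypothetical placeholders.

```python
import ipaddress
import socket


def privatelink_fqdn(namespace: str) -> str:
    """Record name expected in the Relay private DNS zone (privatelink.servicebus.windows.net)."""
    return f"{namespace}.privatelink.servicebus.windows.net"


def resolve_ipv4(hostname: str) -> list[str]:
    """Resolve a hostname from this machine and return its unique IPv4 addresses."""
    infos = socket.getaddrinfo(hostname, 443, socket.AF_INET, socket.SOCK_STREAM)
    return sorted({info[4][0] for info in infos})


def all_private(addresses: list[str]) -> bool:
    """True only if there is at least one address and every address is private (e.g. RFC 1918)."""
    return bool(addresses) and all(ipaddress.ip_address(a).is_private for a in addresses)


def routed_over_vpn(addresses: list[str], vnet_prefixes: list[str]) -> bool:
    """True if every address falls inside an Azure VNet prefix that is routed over the VPN."""
    nets = [ipaddress.ip_network(p) for p in vnet_prefixes]
    return bool(addresses) and all(
        any(ipaddress.ip_address(a) in net for net in nets) for a in addresses
    )


def ranges_overlap(vpc_cidrs: list[str], vnet_cidrs: list[str]) -> bool:
    """Site-to-site VPN routing needs non-overlapping address spaces on both sides."""
    return any(
        ipaddress.ip_network(a).overlaps(ipaddress.ip_network(b))
        for a in vpc_cidrs
        for b in vnet_cidrs
    )


def preflight(namespace: str, vpc_cidrs: list[str], vnet_cidrs: list[str]) -> dict:
    """Run all checks from the gateway host; DNS failures are reported, not raised."""
    report: dict = {
        "zone_record": privatelink_fqdn(namespace),
        "ranges_overlap": ranges_overlap(vpc_cidrs, vnet_cidrs),
    }
    try:
        addrs = resolve_ipv4(f"{namespace}.servicebus.windows.net")
    except socket.gaierror as exc:
        report["resolution_error"] = str(exc)
        return report
    report["addresses"] = addrs
    report["all_private"] = all_private(addrs)
    report["routed_over_vpn"] = routed_over_vpn(addrs, vnet_cidrs)
    return report


# Example (hypothetical namespace and address plan), run on the gateway EC2 instance:
# preflight("contoso-gw-relay", vpc_cidrs=["10.20.0.0/16"], vnet_cidrs=["10.50.0.0/16"])
```

If `all_private` comes back false, the gateway host is still resolving the public record, which usually means the lookup from AWS never reaches the private zone.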

In summary, we changed the data path from:

- Before: AWS gateway → (outbound over Internet) → Microsoft’s default Relay → Power BI

to:

- After: AWS gateway → (over VPN/private link) → Private Endpoint on our Azure Relay → (within Azure backbone) → Power BI
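One way to make the before/after comparison explicit in a design review is to model each path as a list of hops and tag the network each hop crosses. This is a toy model for discussion: the hop and network labels are ours, not an Azure construct, and labeling the default Relay to Power BI hop as Azure backbone is our assumption.

```python
from enum import Enum
from typing import NamedTuple


class Network(Enum):
    PUBLIC_INTERNET = "public internet"
    PRIVATE_LINK = "VPN / private link"
    AZURE_BACKBONE = "Azure backbone"


class Hop(NamedTuple):
    source: str
    target: str
    network: Network


BEFORE = [
    Hop("AWS gateway", "Microsoft default Relay", Network.PUBLIC_INTERNET),
    # Assumption: the Microsoft-managed leg is treated as Azure backbone here.
    Hop("Microsoft default Relay", "Power BI", Network.AZURE_BACKBONE),
]

AFTER = [
    Hop("AWS gateway", "Relay private endpoint", Network.PRIVATE_LINK),
    Hop("Relay private endpoint", "Power BI", Network.AZURE_BACKBONE),
]


def public_hops(path: list[Hop]) -> list[Hop]:
    """Return the hops that cross the public internet."""
    return [hop for hop in path if hop.network is Network.PUBLIC_INTERNET]


for label, path in (("Before", BEFORE), ("After", AFTER)):
    exposed = public_hops(path)
    print(f"{label}: {len(exposed)} public hop(s)", [f"{h.source} -> {h.target}" for h in exposed])
```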

This re-routing through Azure Relay + Private Endpoint achieved the core goal: the link between AWS and Azure is now private. The only portion of the communication that still touches a “public” realm is the connection from the Power BI cloud service to the Azure Relay – and as we’ll explain, that part is inherently secured by Microsoft’s architecture.

Key Challenge: “Can We Lock It Down by IP?” – Not Exactly

One major challenge (and a point of confusion) arose when the client asked: “Can we restrict access to the Azure Relay by IP addresses as well, just to be extra safe?” It’s a natural question – if we’ve gone to the trouble of making a private endpoint, perhaps we could also say “only our AWS VPN’s IPs, and maybe Power BI’s IPs, can talk to this Relay.” Azure Relay does support IP firewall rules to allow or deny traffic from specific IP ranges.

In fact, when setting up the Relay’s network, we had two main options:

- Disable public network access (which we did, using the private endpoint).

- Or, leave public access enabled but add IP firewall rules to restrict who can call the Relay.

We effectively chose the first option (no public access at all, private endpoint only). However, even with “Public access: Disabled,” we noticed an important toggle: “Allow trusted Microsoft services to bypass this firewall.” We had set that to Yes during configuration, based on Microsoft’s guidance, to ensure Power BI would still be able to connect.
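To reason about these options, it helped to write down how we understood the Relay's network gate. The function below is a simplified conceptual model of that understanding, not Azure's actual implementation, and the class and parameter names are hypothetical. It answers only "does this connection get past the network layer?"; a caller that passes still has to present a valid SAS token.

```python
import ipaddress
from dataclasses import dataclass, field


@dataclass
class RelayNetworkRules:
    """Hypothetical model of a Relay namespace's networking settings."""
    public_network_access: bool = False
    allow_trusted_services: bool = True
    ip_allow_list: list[str] = field(default_factory=list)  # CIDRs; only consulted when public access is on


@dataclass
class Caller:
    ip: str
    via_private_endpoint: bool = False
    is_trusted_microsoft_service: bool = False


def passes_network_gate(rules: RelayNetworkRules, caller: Caller) -> bool:
    """Simplified model of which callers reach the authentication step at all."""
    if caller.via_private_endpoint:
        return True   # traffic arriving on the private endpoint
    if rules.allow_trusted_services and caller.is_trusted_microsoft_service:
        return True   # trusted-service bypass, independent of source IP
    if not rules.public_network_access:
        return False  # public access disabled: everything else is dropped
    if not rules.ip_allow_list:
        return True   # simplification: public access with no IP rules is open
    addr = ipaddress.ip_address(caller.ip)
    return any(addr in ipaddress.ip_network(cidr) for cidr in rules.ip_allow_list)


ours = RelayNetworkRules(public_network_access=False, allow_trusted_services=True)
# Placeholder addresses from documentation ranges, not real Power BI IPs.
print(passes_network_gate(ours, Caller("10.50.1.10", via_private_endpoint=True)))            # True
print(passes_network_gate(ours, Caller("198.51.100.20", is_trusted_microsoft_service=True)))  # True
print(passes_network_gate(ours, Caller("203.0.113.7")))                                       # False
```

Setting `allow_trusted_services=False` in this model reproduces the failure mode described next: with public access off, the Power BI caller has no path left.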

The client’s security team wondered if instead we could allow by IP – essentially, which IP addresses would Power BI use to call our Azure Relay, and could we just allow those? Here’s where Microsoft’s architecture has a firm answer: no, you can’t reliably restrict Power BI by IP address in this scenario. The Power BI service is a dynamic, multi-tenant cloud service. It doesn’t connect from a fixed, narrow set of IP addresses that you can pluck out and whitelist on your firewall (at least not in any practical or supportable way). In fact, Microsoft support has stated that you cannot restrict access to specific Azure services based on their public outbound IP ranges. Power BI connects from Azure datacenters, and those IPs are numerous and can change; trying to chase them is not feasible (the published list of Azure datacenter IPs for Power BI is huge and not intended for this sort of filtering).
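To see why chasing IPs is brittle, consider what an allow-list would have to track. Microsoft publishes Azure IP ranges per service tag as a downloadable JSON file (entries under `values`, each with a `name` and `properties.addressPrefixes`), and that file is refreshed regularly. The sketch below diffs two snapshots for one tag. The inline snapshots are invented for illustration and use documentation-only address ranges; they are not real Power BI data.

```python
import json


def prefixes_for_tag(service_tags: dict, tag: str) -> set[str]:
    """Collect addressPrefixes for one service tag from a Service Tags JSON document."""
    for entry in service_tags.get("values", []):
        if entry.get("name") == tag:
            return set(entry.get("properties", {}).get("addressPrefixes", []))
    return set()


def diff_tag(old: dict, new: dict, tag: str) -> tuple[set[str], set[str]]:
    """Return (added, removed) prefixes for a tag between two snapshots."""
    before, after = prefixes_for_tag(old, tag), prefixes_for_tag(new, tag)
    return after - before, before - after


# Illustrative snapshots using RFC 5737 documentation ranges, not real Power BI data.
OLD = json.loads('{"values": [{"name": "PowerBI", "properties": '
                 '{"addressPrefixes": ["192.0.2.0/25", "198.51.100.0/24"]}}]}')
NEW = json.loads('{"values": [{"name": "PowerBI", "properties": '
                 '{"addressPrefixes": ["192.0.2.0/25", "203.0.113.0/24"]}}]}')

added, removed = diff_tag(OLD, NEW, "PowerBI")
print("added:", sorted(added))      # added: ['203.0.113.0/24']
print("removed:", sorted(removed))  # removed: ['198.51.100.0/24']
```

Every added or removed prefix is a firewall change someone has to make on a schedule Microsoft controls, which is exactly the maintenance burden the trusted-services bypass avoids.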

Moreover, in our configuration we completely turned off public access to the Relay, meaning the only way Power BI could reach it was via the “trusted Azure service” bypass. When “Allow trusted Microsoft services” is enabled, Azure skips the IP checks for those services and instead uses the service’s identity to grant access. Power BI, being a Microsoft service, is automatically considered trusted and allowed through to the Relay even if you don’t explicitly whitelist an IP. If we turned that off, Power BI would be unable to connect to our Relay at all (since it was locked to private access only). So the challenge boiled down to educating the client that IP-based restriction for Power BI’s connection wasn’t just unnecessary; it was impossible given Microsoft’s design. The gateway and Relay were already secured by Azure’s authentication (using the shared keys and tokens), and the traffic is fully encrypted (TLS 1.2) in transit, so adding an IP filter on top wouldn’t have enhanced security; it would only have broken functionality.

Microsoft’s explanation (relayed through documentation and our support channels) was essentially: “Power BI needs to access the Azure Relay over its standard channels, which are inherently secure. There’s an internal trust between the Power BI service and Azure Relay that we’ve built – you don’t need to (and can’t) micromanage it by IP.” In other words, by enabling that trusted services toggle, we were doing exactly what we should: trust Azure to know that Power BI is allowed. This was initially counter-intuitive to the client – after all, we talk so much about zero trust and locking everything down. But here, the trust is at the application/service level rather than IP. The Azure Relay only accepts connections with valid authentication tokens, and Power BI’s connection comes with that auth. Even if someone spoofed an Azure data center IP, they couldn’t talk to the Relay without the proper credentials. So, the security is in the keys and tokens, not the IP address.

Microsoft’s Architecture: Why Power BI Needs That “Public” Path (and Why It’s OK)

To elaborate on Microsoft’s architecture: the Power BI cloud service and the on-premises gateway communicate via Azure Relay as a middleman. Power BI places a request into the Relay, and the gateway pulls it from the Relay – kind of like passing a message in a bottle. In the default scenario (without our custom relay), this communication is entirely managed by Microsoft’s infrastructure. When we bring our own Relay and lock it down, we are taking on a bit of that responsibility, but Microsoft still handles the Power BI side of the connection. The Power BI service is not sitting in our VNet (we can’t invite the multi-tenant Power BI SaaS into our private network). So it reaches the Relay through Azure’s network, which from our point of view is “public” (since it’s not inside our VPN).

However, “public” doesn’t mean “untrusted” here. Azure Relay is designed for secure cloud-to-on-premises communication. By default, any connection to it requires a shared access signature (SAS) token or key – which our gateway and the Power BI service have to possess. Microsoft has documented that as long as a request comes with valid authentication and authorization, the Relay will accept it.
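For readers who haven't worked with Relay authentication: a SAS token is an HMAC-SHA256 signature, computed with the SAS rule's key, over the URL-encoded resource URI and an expiry timestamp. The sketch below builds a token in the format Microsoft documents for Service Bus and Relay (`SharedAccessSignature sr=...&sig=...&se=...&skn=...`). The `verify_sas_token` function is our own conceptual illustration of the check a service has to perform, not Azure's server-side code, and the resource URI, rule name and key are placeholders.

```python
import base64
import hashlib
import hmac
import time
import urllib.parse
from typing import Optional


def _sign(string_to_sign: str, key: str) -> str:
    """Base64-encoded HMAC-SHA256 of the string-to-sign, keyed with the SAS rule's key."""
    digest = hmac.new(key.encode("utf-8"), string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    return base64.b64encode(digest).decode("utf-8")


def make_sas_token(resource_uri: str, key_name: str, key: str,
                   ttl_seconds: int = 3600, now: Optional[int] = None) -> str:
    """Build a token in the documented SharedAccessSignature sr/sig/se/skn format."""
    issued = int(time.time()) if now is None else now
    expiry = str(issued + ttl_seconds)
    encoded_uri = urllib.parse.quote_plus(resource_uri)
    signature = urllib.parse.quote_plus(_sign(encoded_uri + "\n" + expiry, key))
    return f"SharedAccessSignature sr={encoded_uri}&sig={signature}&se={expiry}&skn={key_name}"


def verify_sas_token(token: str, keys: dict[str, str], now: Optional[int] = None) -> bool:
    """Conceptual check (not Azure's code): known rule, not expired, signature matches."""
    prefix = "SharedAccessSignature "
    if not token.startswith(prefix):
        return False
    fields = dict(part.partition("=")[::2] for part in token[len(prefix):].split("&"))
    sr, sig, se, skn = (fields.get(k, "") for k in ("sr", "sig", "se", "skn"))
    current = int(time.time()) if now is None else now
    if skn not in keys or not se.isdigit() or int(se) < current:
        return False
    expected = _sign(sr + "\n" + se, keys[skn])
    # The source IP plays no part: without the key, a matching signature cannot be produced.
    return hmac.compare_digest(urllib.parse.unquote_plus(sig).encode(), expected.encode())


uri = "https://contoso-gw-relay.servicebus.windows.net/gateway-01"  # hypothetical relay
keys = {"gateway-send": "placeholder-key-not-real"}  # placeholder; never hard-code real keys
token = make_sas_token(uri, "gateway-send", keys["gateway-send"], now=1_700_000_000)
forged = make_sas_token(uri, "gateway-send", "guessed-key", now=1_700_000_000)
print(verify_sas_token(token, keys, now=1_700_000_100))   # True: valid signature, not expired
print(verify_sas_token(forged, keys, now=1_700_000_100))  # False: wrong key
print(verify_sas_token(token, keys, now=1_700_010_000))   # False: expired
```

This is the practical meaning of "the security is in the keys and tokens": a caller on a spoofed or allowed IP still fails without the key.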

What we did with the private endpoint was restrict where those requests can come from. We basically said: only allow connections via our private endpoint (and from trusted services). So the Power BI service’s connection is recognized as a trusted service connection (it’s coming through Azure’s backbone, not from some random IP on the internet), and it’s allowed. From Power BI’s perspective, nothing really changed – it still connects to “some Azure Relay URL” and sends data. From our perspective, we gained the assurance that the gateway’s side of that connectivity is as private as possible.

Microsoft has a concept of “trusted Microsoft services” which includes things like Power BI, Azure Data Factory, Logic Apps, etc., when they interact with certain Azure resources. By allowing these, we offload the trust to Azure’s identity management. The Power BI service authenticates itself when connecting to the Relay, so Azure knows “this is Power BI, let it in (even if it’s not coming from an IP in a specific list).” The rationale is that IP addresses are a brittle way to secure a service, especially one that operates at cloud scale. Instead, Microsoft built an inherent trust model where the service’s identity and the secure token handshake are what gates the access.

To put it simply: Power BI needs “public” access to the Azure Relay because it’s not inside your private network – but that access is secure by design. Our configuration leveraged that design. We allowed Power BI to do what it needs to do (reach the Relay), and in return we got a fully working system without punching holes for IP ranges that might change. Once this was understood, the client was comfortable moving forward without insisting on an IP whitelist for Power BI. (It also helped to point out that all traffic between the gateway and Power BI was encrypted via TLS 1.2 and authenticated; even when it was “public” previously, it wasn’t in plain text.)

Outcome and Lessons Learned

The end result was a success: we achieved a fully functional Power BI data refresh scenario with a secured network path between AWS and Azure. The on-premises data gateway (running in AWS) now connects to an Azure Relay over a private VPN link, and the Power BI service connects to that Relay through Azure’s backbone (as a trusted service). We tested end-to-end: Dataverse data was queried via the gateway, and Power BI datasets refreshed without issue. From a network perspective, we ran packet captures and verified that the gateway’s traffic was going through the VPN tunnel to Azure, and not out to the general internet. 🎉

Some key takeaways and lessons learned from this implementation:

- Azure Relay + Private Endpoint is a powerful combination for hybrid scenarios: It allowed us to eliminate exposure to the public internet for the AWS-to-Azure leg of the journey. If you’re dealing with on-prem (or AWS/GCP) to Azure service communications, consider using Private Endpoints to keep traffic on a private network. Just ensure you have the network connectivity (VPN, ExpressRoute, etc.) in place to support it.

- “Bring Your Own Relay” is supported and works well: Configuring the on-premises gateway to use a custom Azure Relay was straightforward and is documented by Microsoft. This gives you control over the relay location and settings (like networking). Just remember that each gateway uses a unique Relay endpoint URI and you’ll need to manage the keys securely.

- Understand the limitations of IP-based filtering for cloud services: Not all security can (or should) be enforced with IP firewalls. In our case, trying to restrict the Power BI service by IP wasn’t feasible – Microsoft’s cloud services don’t come with a guarantee of fixed IPs that you can pin down easily, and they expect you to use the “Allow trusted Microsoft services” mechanism. The lesson for future projects is to leverage the cloud’s native trust features (like service tags, private links, identity-based access) instead of forcing IP rules, especially when dealing with SaaS services like Power BI.

- Private Endpoint doesn’t mean everything is private: There might still be a “public” component needed, and that’s okay. In this solution, the Azure Relay’s private endpoint covered the gateway’s connection, but the Power BI service’s connection was essentially through a “public” interface of Azure (even though we disabled public access, the trusted services bypass functions like an internal door). The lesson is to identify which part of the communication you’re securing and not to panic if some cloud-to-cloud communication remains – if it’s service-to-service with proper auth, it’s already secure. Our initial instinct was “we want everything private,” but we learned to nuance that: we got the critical part private, and we relied on Microsoft’s security for the rest.

- Communication and documentation are vital: We had to clearly explain to the client’s teams why certain things were done (like enabling trusted services instead of using an IP whitelist). It reinforced how important it is for architects not only to implement a secure solution but also to educate stakeholders on the reasoning. In hindsight, providing Microsoft’s own documentation snippets about not restricting Azure services by IP was extremely helpful in getting everyone on the same page.

- Test early, test often: We encountered a few hiccups in setting up the private DNS and the VPN routes. Early testing caught issues like the gateway not being able to resolve the Relay’s name because we needed a DNS zone for privatelink.servicebus.windows.net (Azure Relay private endpoints use this dedicated DNS zone). We also discovered the need to open additional outbound ports for the Relay’s private endpoint (Azure Relay with private link may require ports 9350-9354, and in our case we also saw some recommendations for 9400-9599 in certain scenarios). By testing connectivity and reading Azure’s notes, we caught these and adjusted firewall rules accordingly.
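A small reachability probe run from the gateway host is a cheap way to check those ports before touching the gateway itself. It only tests whether a TCP connection can be opened; it does not speak the Relay protocol. The hostname in the example is a hypothetical placeholder, and the port list is the 9350-9354 range from our notes plus 443, which we include as an assumption for the HTTPS path. Adjust it to what the Relay documentation requires for your setup.

```python
import socket
from concurrent.futures import ThreadPoolExecutor

# Ports from our notes: 9350-9354 for the Relay NetTcp path, plus 443 (assumed) for HTTPS.
RELAY_PORTS = [443, *range(9350, 9355)]


def tcp_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """True if a TCP connection to host:port opens within the timeout (no Relay protocol is spoken)."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers refused connections, timeouts and DNS failures
        return False


def probe(host: str, ports: list[int], timeout: float = 3.0) -> dict[int, bool]:
    """Probe several ports in parallel and return {port: reachable}."""
    with ThreadPoolExecutor(max_workers=max(len(ports), 1)) as pool:
        results = list(pool.map(lambda p: tcp_reachable(host, p, timeout), ports))
    return dict(zip(ports, results))


# Example, run on the gateway host (hypothetical namespace):
# for port, ok in probe("contoso-gw-relay.servicebus.windows.net", RELAY_PORTS).items():
#     print(port, "open" if ok else "blocked or unreachable")
```

Running it once with the VPN up and once with the security group rules tightened makes it obvious which firewall layer is dropping traffic.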

In the end, the client’s security box was checked ✅: no sensitive data-in-transit flowing over the public internet between AWS and Azure. And the performance was solid – we didn’t notice any significant difference in refresh times; if anything, the latency was a bit more consistent via the private link.

Architecture Diagram: Before vs. After

To visualize the changes, let’s look at simplified architecture diagrams of the setup before and after the solution was implemented.

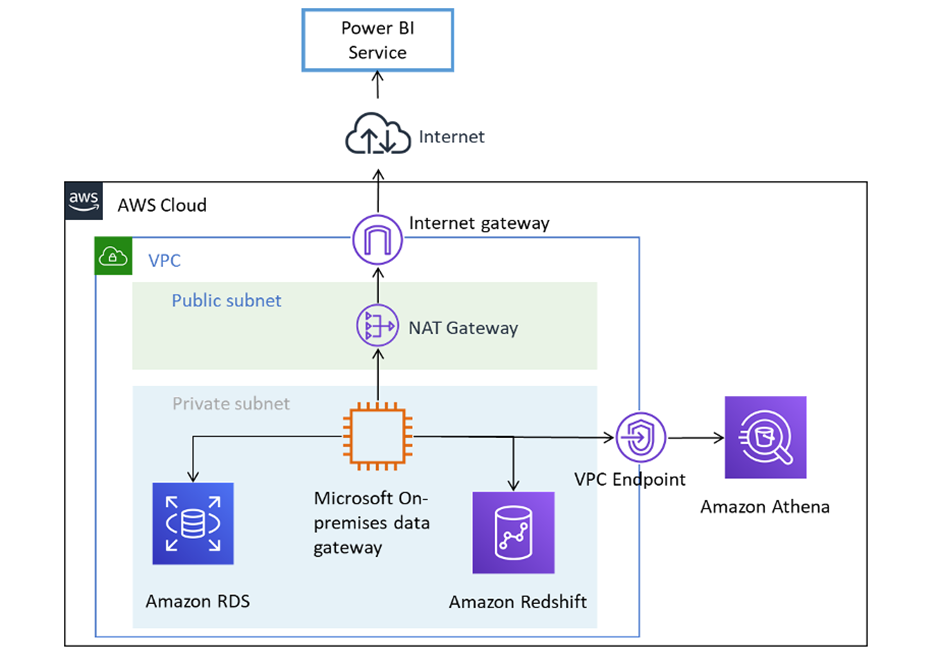

Before: Initially, the on-premises data gateway (running on an AWS EC2 instance) communicated with the Power BI cloud service over the public internet. The gateway, located in a private subnet of a VPC, would send outbound traffic through an AWS NAT Gateway to reach Azure’s Relay (Service Bus) in the cloud. This meant the traffic from AWS to Azure (and back) traveled via the internet (depicted by the cloud icon), albeit encrypted. The data sources in AWS (e.g., Amazon RDS, Redshift, etc., represented in purple) stayed in the private subnet, and only the gateway’s connection to Power BI traversed the internet. This was functional but exposed that cross-cloud link to external networks.

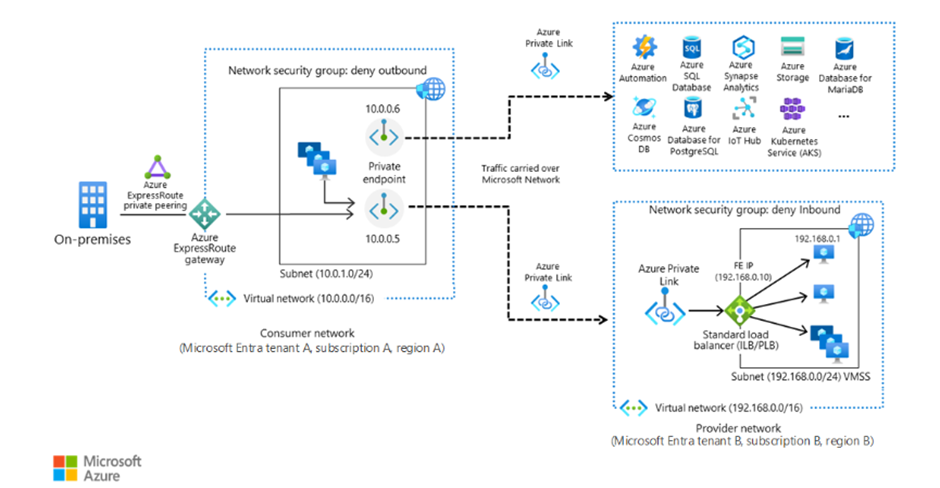

After: With the new solution, the gateway’s connection to Azure is through a Private Endpoint over a VPN/ExpressRoute. The Azure Relay lives in a VNet with a private IP (shown as the lock icon in the Azure Private Link box), and the AWS gateway’s traffic is tunneled directly to Azure through a secure connection (no internet). All Azure-bound traffic from AWS goes into the VPN (or ExpressRoute circuit) and terminates in the Azure VNet, where it hits the private endpoint of the Relay. Power BI’s service (on the right) still connects to the Relay, but that connection is handled within Azure’s network (trusted service). Essentially, the public internet is no longer in the path between the AWS data sources and Power BI; the path is AWS -> Azure private network -> Azure service. The dotted line to the Power BI icon indicates that Power BI’s queries reach the private endpoint internally. This “after” diagram shows a much more secure posture: any communication between AWS and Azure stays within known private channels (AWS’s network + Microsoft’s network), greatly reducing exposure.

Takeaways for Future Implementations

Designing hybrid cloud connectivity can be complex, but a few guiding principles can help ensure security and functionality:

- Leverage Cloud-Native Features: Use services like Azure Private Link to keep traffic off the public internet whenever possible. Azure Relay, Azure Service Bus, Azure Storage – many of these support private endpoints now. This can eliminate whole classes of network risk without adding much overhead. Just remember that when you use Private Link, you’ll need to handle cross-network connectivity (VPN, ExpressRoute) and DNS configuration so your on-prem or other cloud environment knows how to reach the private endpoint.

- “Bring Your Own” Hybrid Connectivity: If a service allows you to bring your own integration point (like bringing your own Azure Relay for the Power BI gateway), consider it. It gives you more control. In our case, bringing our own Relay meant we could place it in a specific region, under specific networking rules, which wouldn’t have been possible with the default globally managed relay.

- Understand Service Trust Models: When dealing with SaaS services (Power BI, Dynamics 365, etc.), investigate how they authenticate and connect to resources. Often, you’ll find options like “Allow trusted Microsoft services” or service tags that abstract the actual IPs. Use these features – they exist because Microsoft has established a secure identity-based trust. It’s usually better than maintaining a huge list of IP addresses. Plus, as we learned, some services simply won’t work with IP whitelisting; designing around the service’s supported model will save you headaches.

- Don’t Over-Secure to the Point of Breaking: It’s all about balance. Our client initially wanted to lock down by IP in addition to everything else. It’s an understandable impulse, but you need to align with what’s technically achievable and supportable. Sometimes adding another layer of “security” (like an IP block) doesn’t actually increase security much (if at all) but can undermine reliability or functionality. Always ask: What risk does this control actually mitigate? Is that risk already handled by another control? In our case, encryption and token auth were already mitigating the risk of unauthorized access, so IP filtering Power BI would have been redundant.

- Documentation and Support are Your Friends: We often dove into Microsoft Docs and even forums to double-check behaviors (for example, ensuring that Power BI was indeed covered under “trusted services” for Azure Relay, or what ports are needed for Relay over VPN). When doing something a bit off the beaten path, it’s worth checking vendor documentation or reaching out to support to validate your approach. This can prevent a lot of trial and error.

- Plan for End-to-End Testing: In a multi-cloud or hybrid setup, many things can go wrong – DNS, routing, firewall rules, authentication, etc. We benefited from having a testing plan that checked each piece (could the gateway connect to the Relay? Could Power BI talk to the gateway? What happens if the VPN drops? etc.). This holistic testing mindset is critical. It’s much easier to adjust the design in the early stages than to find out after deployment that something’s not working right.
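A testing plan like this is easiest to repeat when it lives in a small script rather than in someone's head. The runner below is a generic sketch: each check is a named callable returning True or False, and the check names and stubbed lambdas are hypothetical stand-ins for real probes such as a DNS lookup, a port check or a test refresh.

```python
from typing import Callable

Check = Callable[[], bool]


def run_checks(checks: dict[str, Check]) -> dict[str, str]:
    """Run each named check in order and record PASS, FAIL, or ERROR."""
    results: dict[str, str] = {}
    for name, check in checks.items():
        try:
            results[name] = "PASS" if check() else "FAIL"
        except Exception as exc:  # a crashing check is reported instead of aborting the run
            results[name] = f"ERROR: {exc}"
    return results


def report(results: dict[str, str]) -> bool:
    """Print one line per check; return True only if every check passed."""
    for name, outcome in results.items():
        print(f"{outcome:<6} {name}")
    return all(outcome == "PASS" for outcome in results.values())


# Hypothetical stubs standing in for real probes (DNS lookup, port check, test refresh).
checks: dict[str, Check] = {
    "relay name resolves to a private IP": lambda: True,
    "gateway reaches relay ports over the VPN": lambda: True,
    "refresh fails cleanly when the VPN drops": lambda: False,
}
print("all passed:", report(run_checks(checks)))
```

Treating a crashing check as ERROR rather than stopping the run matters in hybrid setups, where one broken hop (say, DNS) should not hide the state of the others.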

In conclusion, securing the Dataverse-to-Power BI traffic path required a mix of cloud networking savvy and understanding how Power BI’s gateway architecture works. By introducing an Azure Relay with a private endpoint, we created a secure tunnel for data to flow between AWS and Azure, satisfying the client’s requirements without sacrificing functionality. We also learned firsthand that sometimes the cloud already has the solution (trusted services and encrypted channels) and we just need to plug into it rather than reinvent it.

For technical architects designing similar solutions, the main insight is to use the building blocks provided by the cloud platforms (like Azure Relay, Private Link, VPN connectivity) and configure them in a way that balances security and practicality. And always remember to verify why something is needed – as we saw with the IP restriction question, sometimes the best answer is “you don’t actually need to do that.”

Closing thought: Hybrid cloud integrations can indeed be done securely. The key is understanding the flow of data and securing each hop appropriately – sometimes with technology, sometimes with trust. In our case, once the solution was in place, the client’s Dataverse and Power BI could chat away confidently, with us architects breathing a sigh of relief that those chats weren’t happening in public view. Secure, efficient, and cloud-powered – just the way we like it.